ChatGPT is a powerful AI language model that has changed the way we interact with natural language processing. However, as with any API, the way you use it can be optimized for faster response times and a smoother user experience. In this blog post, we will look at what determines ChatGPT API response times and the strategies that can improve them: understanding the factors that affect performance, implementing efficient caching, and leveraging asynchronous processing for faster results. We'll also discuss why monitoring and analyzing ChatGPT API performance data matters for continuous improvement. By the end of this post, you will have a practical understanding of how to optimize ChatGPT API response times for seamless interactions and improved user satisfaction.

When using the ChatGPT API, one of the key factors to consider is response time: the time it takes for the API to process a request and return a result. Understanding response times is crucial for optimizing performance and ensuring a seamless experience for users.
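A simple place to start is timing each request on the client side. The minimal sketch below wraps any callable with `time.perf_counter`, a monotonic clock suited to measuring elapsed time. `fake_api_call` is a hypothetical stand-in for a real ChatGPT API request, used only so the example runs offline.

```python
import time
from typing import Any, Callable, Tuple


def timed_call(func: Callable[..., Any], *args: Any, **kwargs: Any) -> Tuple[Any, float]:
    """Call func and return (result, elapsed_seconds) measured with a monotonic clock."""
    start = time.perf_counter()
    result = func(*args, **kwargs)
    elapsed = time.perf_counter() - start
    return result, elapsed


def fake_api_call(prompt: str) -> str:
    """Hypothetical stand-in for a ChatGPT API request; sleeps to simulate latency."""
    time.sleep(0.05)
    return f"echo: {prompt}"


if __name__ == "__main__":
    reply, seconds = timed_call(fake_api_call, "Hello")
    print(f"{reply!r} took {seconds * 1000:.1f} ms")
```

Swapping `fake_api_call` for your real request function gives you per-request timings you can log and analyze later.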

Several factors can affect the response times of the ChatGPT API, including the complexity of the request, the current load on the API servers, and the network latency between the client and the server. By understanding these factors and their impact, developers can take steps to improve the response times their applications experience.

One strategy for optimizing response times is to implement efficient caching. By caching responses to frequently repeated requests in your application, you avoid sending the same request to the API multiple times, which improves response times and overall performance. Additionally, leveraging asynchronous processing lets your application send multiple requests concurrently instead of waiting for each one in turn, so results come back more efficiently.

Monitoring and analyzing ChatGPT API performance data is also crucial for understanding response times. By tracking response times over time and identifying any patterns or trends, developers can gain insights into potential areas for improvement and make informed decisions about how to optimize the API for better performance.

Several factors can impact the overall efficiency of the ChatGPT API. One of the most critical is server load: when the servers are overloaded with requests, response times increase and user inputs take longer to process. Network latency also plays a significant role, since the speed at which data travels between client and server adds to every response time.

Another factor that can affect the performance of the ChatGPT API is the complexity of requests: more complex requests require additional processing time, which leads to slower responses. The size of the response matters as well. Larger responses take longer to generate, because the model produces its output one token at a time, and longer to transmit, especially over slower network connections.
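Because both request size and response length add time, a common tactic is to send only the conversation context a request actually needs and to cap the reply length. The sketch below is illustrative and rests on assumptions: it keeps system messages plus the most recent turns, and builds a request body in the shape used by OpenAI's Chat Completions endpoint (`model`, `messages`, `max_tokens`). The model name and both limits are placeholders, and `trim_history` and `build_payload` are hypothetical helpers, not part of any SDK.

```python
from typing import Dict, List

Message = Dict[str, str]


def trim_history(messages: List[Message], max_turns: int) -> List[Message]:
    """Return system messages first, then only the last `max_turns` non-system messages."""
    system = [m for m in messages if m["role"] == "system"]
    recent = [m for m in messages if m["role"] != "system"]
    if max_turns <= 0:
        return system
    return system + recent[-max_turns:]


def build_payload(
    messages: List[Message],
    model: str = "gpt-3.5-turbo",  # placeholder model name (assumption)
    max_tokens: int = 256,         # caps the length of the generated reply
    max_turns: int = 6,            # how many recent turns of context to keep
) -> Dict[str, object]:
    """Build a Chat Completions-style request body with trimmed context and a reply-length cap."""
    return {
        "model": model,
        "messages": trim_history(messages, max_turns),
        "max_tokens": max_tokens,
    }
```

Trimming history trades some conversational memory for speed, so the right `max_turns` depends on how much context your prompts genuinely need.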

Furthermore, inefficient code and poorly optimized algorithms in your own application can add latency on top of the API's processing time. Writing clean, efficient code and optimizing algorithms keeps that overhead low. Hardware limitations can also play a role: if the machines running your application are outdated or underpowered, they add delay and decrease overall performance.

| Factors Affecting ChatGPT API Performance |
|---|
| Server Load |
| Network Latency |
| Complexity of Requests |
| Size of the Response |
| Inefficient Coding and Poorly Optimized Algorithms |
| Hardware Limitations |

When it comes to optimizing ChatGPT API response times, there are several strategies developers can employ. One key factor is the server infrastructure your application runs on. By using servers with adequate processing power and memory, developers can minimize the overhead their own application adds to each request and improve overall performance.

Another important consideration is network latency. By optimizing network configurations and using content delivery networks (CDNs) to serve content close to users, developers can reduce the time requests and responses spend in transit. Additionally, minimizing the amount of data transferred between the client and the API can further improve response times.

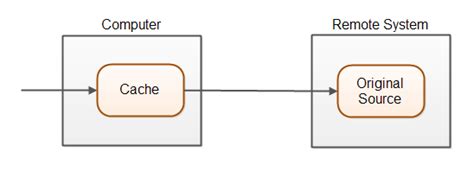

Implementing caching techniques is another effective strategy for optimizing ChatGPT API response times. By caching frequently accessed data and pre-computed results, developers can reduce repetitive processing and improve response times. This can be achieved using in-memory caching, distributed caching, and edge caching, among other techniques.
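For the in-memory case, Python's standard `functools.lru_cache` is enough to illustrate the idea: identical prompts are answered from memory instead of triggering a new request. `fetch_completion` below is a hypothetical placeholder that only counts how often the "real" call happens. This kind of cache only makes sense where a repeated prompt should get the same answer, since model output can otherwise vary between calls.

```python
from functools import lru_cache

CALLS = {"count": 0}  # tracks how many times the underlying "API" is actually hit


def fetch_completion(prompt: str) -> str:
    """Hypothetical placeholder for a real ChatGPT API request."""
    CALLS["count"] += 1
    return f"answer to: {prompt}"


@lru_cache(maxsize=1024)
def cached_completion(prompt: str) -> str:
    """Serve repeated prompts from memory; least recently used entries are evicted past maxsize."""
    return fetch_completion(prompt)


if __name__ == "__main__":
    cached_completion("What is caching?")
    cached_completion("What is caching?")  # served from the cache
    print(CALLS["count"], cached_completion.cache_info())
```

A process-local cache like this disappears on restart and is not shared between servers; the distributed and edge caching mentioned above address those limits.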

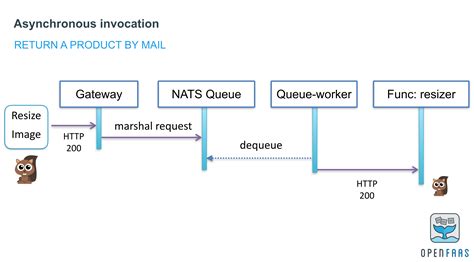

Furthermore, leveraging asynchronous processing can significantly improve response times for certain types of requests. By offloading time-consuming tasks to background processes and returning immediate responses to the client, developers can ensure a more responsive and efficient API. This can be particularly advantageous for handling large volumes of requests or complex computations.
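One way to sketch the "return immediately, finish in the background" pattern is a small job store backed by `concurrent.futures.ThreadPoolExecutor`: the caller gets a job ID right away and polls for the result later. `JobStore` and `slow_task` are hypothetical names; a production system would more likely use a dedicated task queue, but the control flow is similar.

```python
import time
import uuid
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Any, Callable, Dict, Optional


class JobStore:
    """Runs submitted tasks on worker threads and lets callers poll by job ID."""

    def __init__(self, max_workers: int = 4) -> None:
        self._executor = ThreadPoolExecutor(max_workers=max_workers)
        self._jobs: Dict[str, Future] = {}

    def submit(self, func: Callable[..., Any], *args: Any) -> str:
        """Schedule func(*args) on a worker thread and return a job ID immediately."""
        job_id = uuid.uuid4().hex
        self._jobs[job_id] = self._executor.submit(func, *args)
        return job_id

    def status(self, job_id: str) -> str:
        """Report "done" once the task has finished (successfully or not), else "pending"."""
        return "done" if self._jobs[job_id].done() else "pending"

    def result(self, job_id: str, timeout: Optional[float] = None) -> Any:
        """Block until the task finishes (or timeout expires) and return its result."""
        return self._jobs[job_id].result(timeout=timeout)

    def shutdown(self) -> None:
        self._executor.shutdown(wait=True)


def slow_task(prompt: str) -> str:
    """Hypothetical long-running work, such as a large ChatGPT API request."""
    time.sleep(0.2)
    return prompt.upper()


if __name__ == "__main__":
    store = JobStore()
    job = store.submit(slow_task, "summarize this")
    print(store.status(job))  # typically "pending" right after submission
    print(store.result(job))
    store.shutdown()
```

In a web service, `submit` would back an endpoint that responds with the job ID at once, and `status`/`result` would back a polling endpoint.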

When it comes to optimizing ChatGPT API performance, one of the most effective strategies is to implement efficient caching techniques. Caching involves storing frequently accessed data in a temporary location so that it can be quickly retrieved when needed. By utilizing caching, we can reduce the number of external API calls and improve response times for our users.

To effectively implement caching, it’s important to identify which data should be cached and for how long. By leveraging caching strategically, we can strike a balance between improving response times and ensuring that users receive the most up-to-date information. Additionally, we can utilize techniques such as asynchronous processing to update cached data in the background, further minimizing the impact on response times.
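One simple way to decide "for how long" is a time-to-live (TTL): each cached entry expires a fixed number of seconds after it is stored, and the next request after that fetches a fresh answer. The `TTLCache` class below is a hypothetical, minimal sketch (not thread-safe, no size limit, and a cached value of `None` is treated as a miss). The clock is injectable so expiry can be demonstrated without waiting.

```python
import time
from typing import Callable, Dict, Generic, Optional, Tuple, TypeVar

V = TypeVar("V")


class TTLCache(Generic[V]):
    """Minimal time-to-live cache: entries expire `ttl` seconds after being stored."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl
        self._clock = clock
        self._data: Dict[str, Tuple[float, V]] = {}  # key -> (expires_at, value)

    def get(self, key: str) -> Optional[V]:
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            # Stale entry: drop it so the caller fetches fresh data.
            del self._data[key]
            return None
        return value

    def set(self, key: str, value: V) -> None:
        self._data[key] = (self._clock() + self.ttl, value)

    def get_or_fetch(self, key: str, fetch: Callable[[], V]) -> V:
        """Return the cached value, or call fetch() and cache its result on a miss."""
        value = self.get(key)
        if value is None:
            value = fetch()
            self.set(key, value)
        return value
```

A short TTL favors freshness and a long TTL favors speed; data that rarely changes, such as answers to FAQ-style prompts, can usually tolerate a longer one.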

Using efficient caching techniques can have a significant impact on overall API performance. By reducing the need for repetitive data retrieval and processing, we can greatly improve the speed and efficiency of our API responses. Furthermore, monitoring and analyzing caching performance data can provide valuable insights for further optimization.

When it comes to optimizing the performance of the ChatGPT API, leveraging asynchronous processing can significantly improve response times and overall efficiency. Asynchronous processing allows tasks to run concurrently rather than sequentially, which can greatly reduce the total time spent waiting on API requests. By implementing asynchronous processing, you can keep many requests in progress at once, leading to faster results and improved scalability.

One way to leverage asynchronous processing is with asyncio in Python or async/await in JavaScript. These features allow for non-blocking I/O: an API request can be sent without blocking the program while it waits for the response, freeing it to handle other tasks in the meantime. By using these techniques, you can improve the overall performance of your ChatGPT API integration and provide a more seamless experience for users.
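The effect is easy to see with `asyncio`. In the sketch below, `fake_request` is a hypothetical coroutine that simulates a request with `asyncio.sleep`; in a real application it would be a call through an async HTTP client or an async SDK method. Three sequential 0.1-second requests would take about 0.3 seconds, while `asyncio.gather` overlaps the waiting so the batch finishes in roughly 0.1 seconds.

```python
import asyncio
import time
from typing import List


async def fake_request(prompt: str, latency: float = 0.1) -> str:
    """Hypothetical stand-in for a non-blocking ChatGPT API call."""
    await asyncio.sleep(latency)  # yields to the event loop while "waiting on the network"
    return f"reply to {prompt}"


async def run_concurrently(prompts: List[str]) -> List[str]:
    # gather runs all coroutines concurrently and returns results in input order.
    return list(await asyncio.gather(*(fake_request(p) for p in prompts)))


if __name__ == "__main__":
    start = time.perf_counter()
    replies = asyncio.run(run_concurrently(["a", "b", "c"]))
    print(replies, f"{time.perf_counter() - start:.2f}s")
```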

In addition to reducing wait times, asynchronous processing can also help to optimize resource utilization and improve overall system efficiency. By handling tasks concurrently, you can make better use of available resources and ensure that your application can handle a larger volume of requests without slowing down or becoming unresponsive. This can be particularly beneficial in high-traffic scenarios, where the ability to process requests quickly and efficiently is crucial.
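Concurrency still needs a ceiling in high-traffic scenarios, or a burst of tasks can overwhelm your own process or run into the API's rate limits. A common pattern is an `asyncio.Semaphore` that caps how many requests are in flight at once. The limit of 5, the simulated `fake_request` coroutine, and the peak counter (there only to make the cap observable) are illustrative assumptions.

```python
import asyncio
from typing import List, Tuple


async def fake_request(prompt: str) -> str:
    """Hypothetical stand-in for a non-blocking ChatGPT API call."""
    await asyncio.sleep(0.05)
    return f"reply to {prompt}"


async def bounded_batch(prompts: List[str], limit: int = 5) -> Tuple[List[str], int]:
    """Run all prompts concurrently, but never more than `limit` at a time.

    Returns the replies (in input order) and the peak number of requests in flight.
    """
    semaphore = asyncio.Semaphore(limit)
    in_flight = 0
    peak = 0

    async def worker(prompt: str) -> str:
        nonlocal in_flight, peak
        async with semaphore:  # waits here while `limit` requests are already running
            in_flight += 1
            peak = max(peak, in_flight)
            try:
                return await fake_request(prompt)
            finally:
                in_flight -= 1

    replies = await asyncio.gather(*(worker(p) for p in prompts))
    return list(replies), peak


if __name__ == "__main__":
    replies, peak = asyncio.run(bounded_batch([str(i) for i in range(20)]))
    print(len(replies), "replies, peak concurrency:", peak)
```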

Overall, leveraging asynchronous processing for faster results is a crucial strategy for optimizing the performance of the ChatGPT API. By implementing these techniques, you can reduce wait times, improve scalability, and enhance overall system efficiency, leading to a better user experience and greater overall performance.

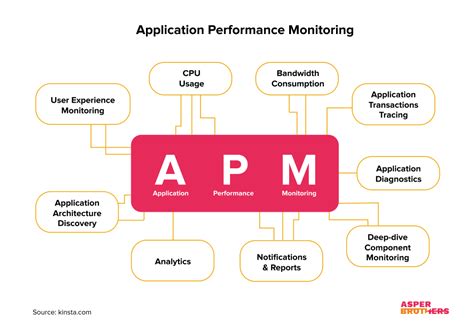

When it comes to ensuring the optimal performance of the ChatGPT API, monitoring and analyzing the performance data is absolutely crucial. By systematically recording and analyzing various performance metrics, developers can gain valuable insights into the API’s behavior and identify potential areas for improvement.

One effective way to monitor ChatGPT API performance data is by leveraging real-time monitoring tools. These tools allow developers to continuously track key performance indicators such as response times, error rates, and throughput. By staying informed about the API’s performance in real time, developers can quickly respond to any issues and proactively address any potential bottlenecks.
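Before adopting a full monitoring product, the core indicators can be computed from a rolling window of recent requests. `RollingMetrics` below is a hypothetical sketch that keeps the last N samples and reports p50/p95 latency, error rate, and approximate throughput over that window. It assumes samples are recorded in completion order, and the window size is illustrative.

```python
import statistics
import time
from collections import deque
from typing import Deque, Dict, Optional, Tuple


class RollingMetrics:
    """Tracks latency, error rate, and throughput over the most recent `window` requests."""

    def __init__(self, window: int = 1000) -> None:
        # Each sample is (finished_at, latency_seconds, succeeded); old samples fall off automatically.
        self._samples: Deque[Tuple[float, float, bool]] = deque(maxlen=window)

    def record(self, latency: float, ok: bool, finished_at: Optional[float] = None) -> None:
        ts = time.time() if finished_at is None else finished_at
        self._samples.append((ts, latency, ok))

    def summary(self) -> Dict[str, float]:
        if len(self._samples) < 2:
            raise ValueError("need at least two samples")
        latencies = [lat for _, lat, _ in self._samples]
        # 99 cut points: cuts[49] is the 50th percentile, cuts[94] the 95th.
        cuts = statistics.quantiles(latencies, n=100, method="inclusive")
        errors = sum(1 for _, _, ok in self._samples if not ok)
        span = self._samples[-1][0] - self._samples[0][0]
        return {
            "p50_s": cuts[49],
            "p95_s": cuts[94],
            "error_rate": errors / len(self._samples),
            # Approximate requests per second across the window's time span.
            "throughput_rps": len(self._samples) / span if span > 0 else float("inf"),
        }
```

Tracking p95 alongside the median matters because a small share of slow requests can hurt user experience even when the average looks healthy.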

Furthermore, analyzing historical performance data can provide valuable insights into long-term trends and patterns. Developers can use this data to identify any consistent performance issues and optimize the API’s infrastructure accordingly. By understanding how the API’s performance has evolved over time, developers can make informed decisions about infrastructure scaling, resource allocation, and capacity planning.
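For historical analysis, a useful first step is to bucket logged requests by time and compare a high percentile across buckets, which makes recurring slow periods stand out. The sketch below assumes a hypothetical log format of `(unix_timestamp, latency_seconds)` pairs and groups them by UTC hour of day; `p95_by_hour` is an illustrative helper.

```python
import statistics
from collections import defaultdict
from datetime import datetime, timezone
from typing import Dict, Iterable, List, Tuple


def p95_by_hour(records: Iterable[Tuple[float, float]]) -> Dict[int, float]:
    """Group (unix_timestamp, latency_seconds) records by UTC hour and return each hour's p95 latency."""
    buckets: Dict[int, List[float]] = defaultdict(list)
    for ts, latency in records:
        hour = datetime.fromtimestamp(ts, tz=timezone.utc).hour
        buckets[hour].append(latency)
    result: Dict[int, float] = {}
    for hour, values in sorted(buckets.items()):
        if len(values) >= 2:  # hours with a single sample are skipped; quantiles needs two points
            # n=20 gives 19 cut points; index 18 is the 95th percentile.
            result[hour] = statistics.quantiles(values, n=20, method="inclusive")[18]
    return result
```

Calling `max(result, key=result.get)` on the output picks out the slowest hour, a starting point for scaling or capacity-planning decisions.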

Another valuable tool for monitoring and analyzing ChatGPT API performance data is the use of performance dashboards. These dashboards provide a visually intuitive way to monitor and analyze key performance metrics, allowing developers to quickly identify any performance anomalies or areas for improvement.

What is ChatGPT API?

The ChatGPT API is OpenAI's API for its GPT chat models (such as gpt-3.5-turbo and GPT-4), which generate human-like text responses based on the input provided.

How does optimizing response times benefit users?

Optimizing response times ensures that users receive quicker and more efficient responses from the ChatGPT API, leading to a better user experience.

What are some techniques for optimizing ChatGPT API response times?

Some techniques for optimizing response times include efficient caching of frequently requested data, minimizing unnecessary API calls, and using asynchronous processing for parallel tasks.

Can you provide examples of how to measure the performance of ChatGPT API?

Performance of ChatGPT API can be measured using metrics such as response time, throughput, error rates, and system resource utilization.

What are the typical challenges in optimizing API response times?

Challenges in optimizing API response times include balancing performance with resource consumption, handling concurrent requests efficiently, and maintaining consistency in response quality.

What role does caching play in optimizing response times for ChatGPT API?

Caching frequently requested data helps reduce the need for repeated computations, leading to faster responses and lower server load, thereby optimizing response times.

How can developers integrate optimization techniques for ChatGPT API response times into their applications?

Developers can integrate optimization techniques by using efficient algorithms and appropriate data structures in their own code, caching repeated requests, issuing independent requests asynchronously, and leveraging performance monitoring tools to identify bottlenecks.